One of the challenges when designing AI agents is how to support people in understanding their responses. Making AI outputs explainable is important for the accountability of AI decisions in critical domains like law and medicine. In everyday life, however, technical and static AI explanations are often not effective, and in some cases are not even inclusive, as they position the user (and others affected by AI) as passive recipients. Thus, there is a real need to improve our everyday understanding of AI, allowing users to develop more implicit ways of understanding AI through interaction and experimentation. In this context, misunderstandings become meaningful interactions through which people learn what agents can and cannot do.

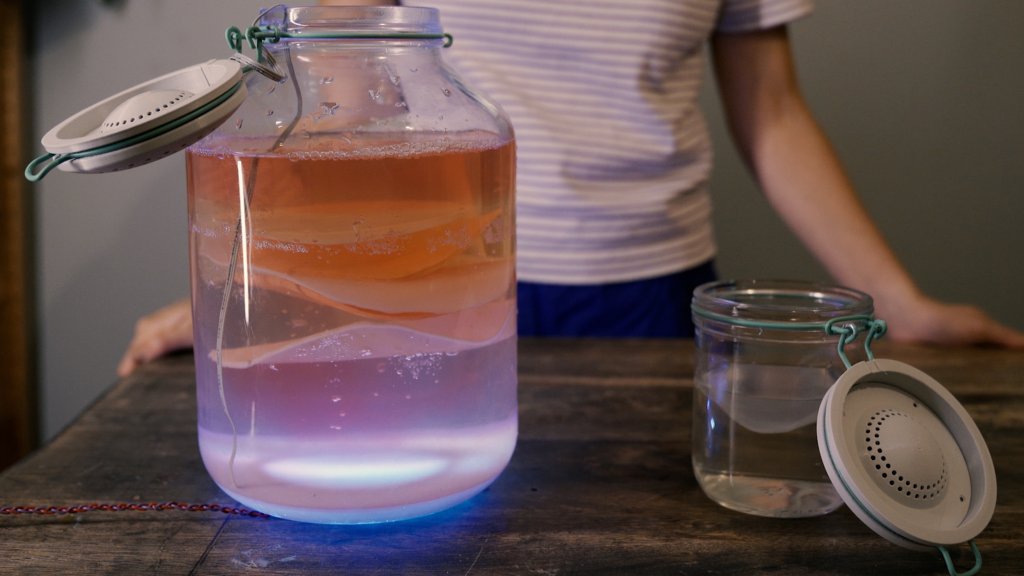

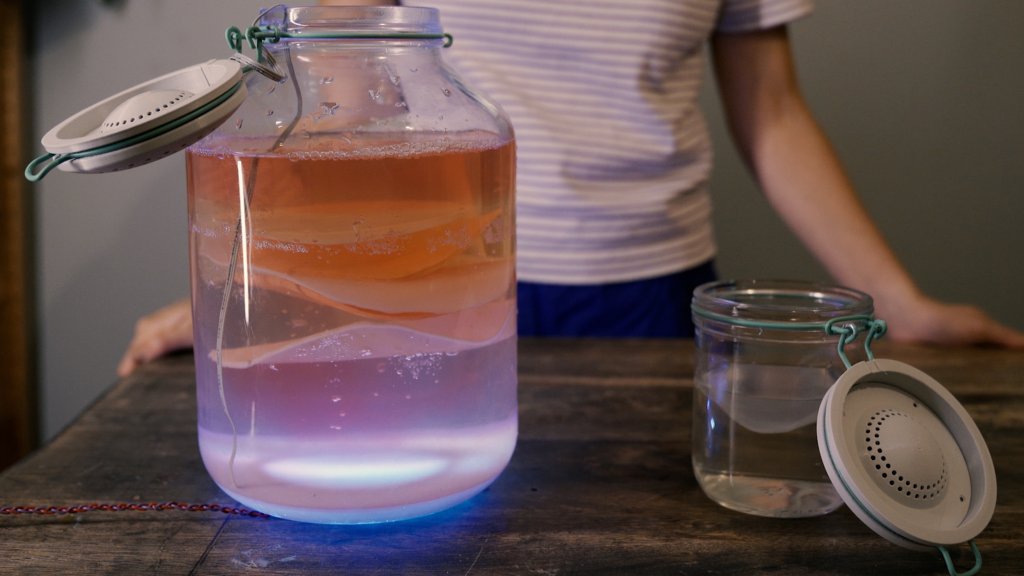

This project takes a more-than-human design orientation to consider the agency of both people and agents in everyday (mis)understandings of AI. It uses the metaphor of growing things to imagine alternative interactions with conversational agents, in which practices of sharing, experimenting, caring, and repairing could be valuable starting points for imagining agents that are more situated, responsible, and response-able.

The project is part of my PhD, which is supervised by Elisa Giaccardi and Johan Redström, and funded by a Microsoft Research PhD Fellowship. Focusing on more-than-human design and the posthumanist turn in HCI, this project provides an example of how we might decenter humans in the design process and design for the entanglements between humans and non-humans, from intelligent agents to other species.

The design process includes several more-than-human design methods: thing ethnography (Giaccardi, 2016), conversations with agents (Nicenboim, 2020), AI metaphors (Murray-Rust, 2022), and noticing (Liu, 2019).